The Avail Testnet is now live. As users begin incorporating Avail into their chain designs, a question that often comes up is, “How many transactions can Avail process?” This is the final piece in a three-part series of articles that will address Avail’s current performance, as well as its ability to scale in the near and long term. You can read Part One here and Part Two here.

The model below describes an architecture where the acts of proposing and building a block (deciding which transactions/blobs to include in the block) are separated and performed by different participants.

By creating this new entity of block builders, the computational work required to generate row commitments, and generate cell proofs can be split between different participants.

Avail does one core thing: it takes in data and spits ordered data out. Think about it like an API. Avail enables anyone to sample for data availability.

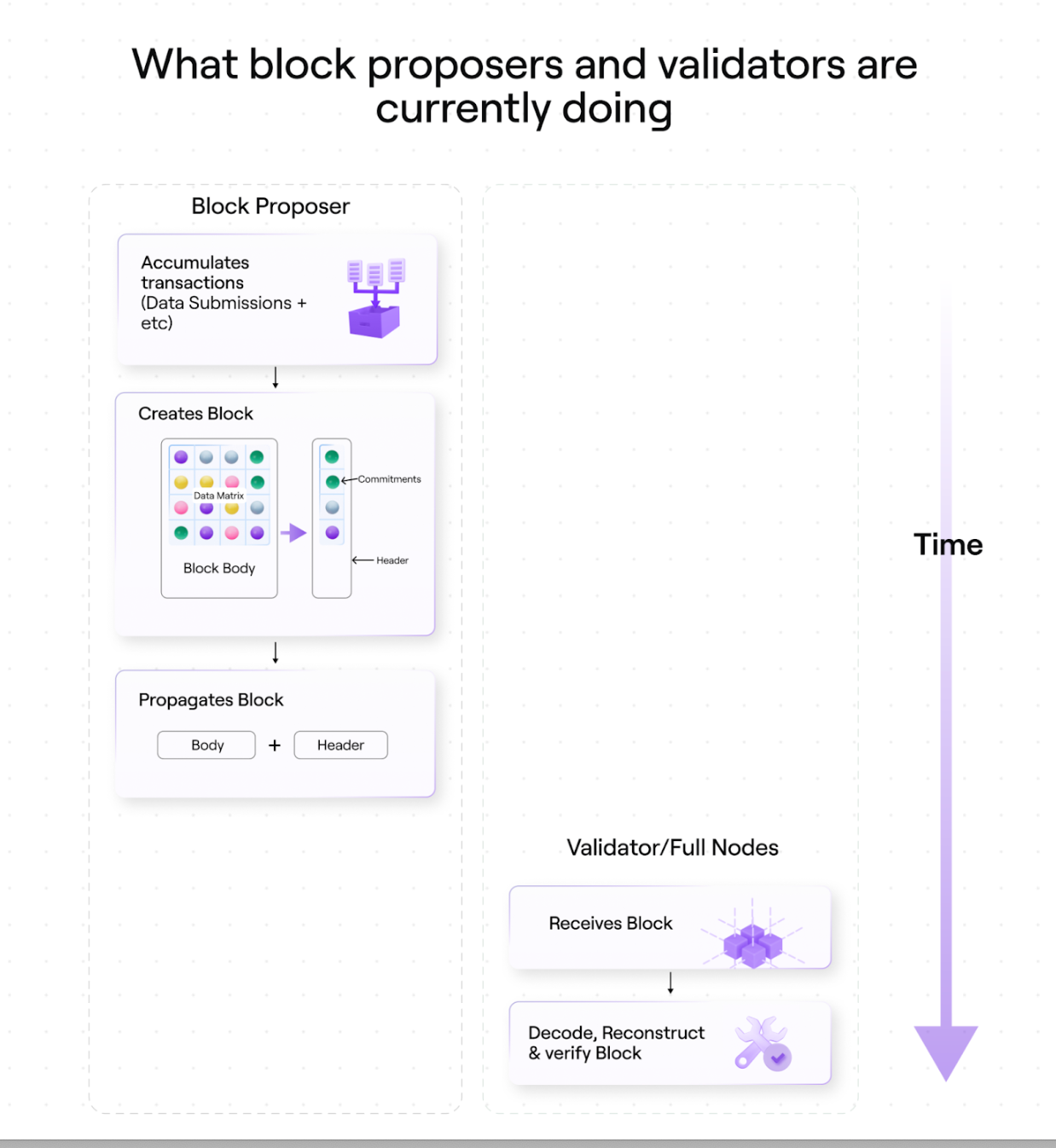

Before highlighting where we can improve, we’ll start by elaborating on exactly what Avail asks from block proposers and validators/full nodes in its current state.

- Block producer creates the block body

- Accumulates transactions (data submissions)

- Orders these transactions into the Avail data matrix, which becomes the block body

- Block producer creates the block header

- Generates commitments for each row of the matrix

- Extends those commitments using polynomial interpolation (the generated + extended commitments become the block header)

- Block producer propagates the block (the body + the header)

- Validators and full nodes receive the block

- Validators and full nodes decode, reconstruct, and verify the block

- Recreate the data matrix

- Recreate the commitments

- Extend the commitments

- Verify all the data they received matches the commitments they generated

Step 5, where full nodes are asked to regenerate the header, is unnecessary in a system like Avail’s.

Full nodes do this currently only because Avail inherits its architecture from traditional blockchains, which need validators to confirm that execution was done correctly. Avail doesn’t handle execution. Block proposers, validators, and light clients alike only care about availability. This means that all participants in the Avail network have the option to use Data Availability Sampling to trustlessly confirm data availability

Because validators and full nodes can sample to check for availability, they are not required to recreate the entire block to guarantee network security.

Validators don't need to check that everything is true by redoing all the things the producer did. Instead, they can check that everything is true by sampling a handful of times. Just as light clients do, when the statistical guarantee of availability is met (after 8 - 30 samples), validators can add that block to the chain. Because Avail does not handle data execution, this is possible to do securely.

Instead of a laborious 1:1 validation process, data sampling offers validators a much quicker alternative. The magic of Avail is that by using the headers alone, anyone (in this case, validators) can reach consensus that they are following the correct chain.

If we can do that, we can replace the entire header recreation step with a few samples.

This shift in what we ask of validators, alongside a handful of other improvements is what will be explored in this piece. We’ll describe one improved system where block proposers (still) create and propagate blocks, but all other network participants interact with the network via data availability sampling. We’ll then introduce a system that takes this one step further, and separates block building, and block proposition so it is conducted by two different network participants.

It’s important to note that these changes are somewhat more advanced, and are still being actively researched.

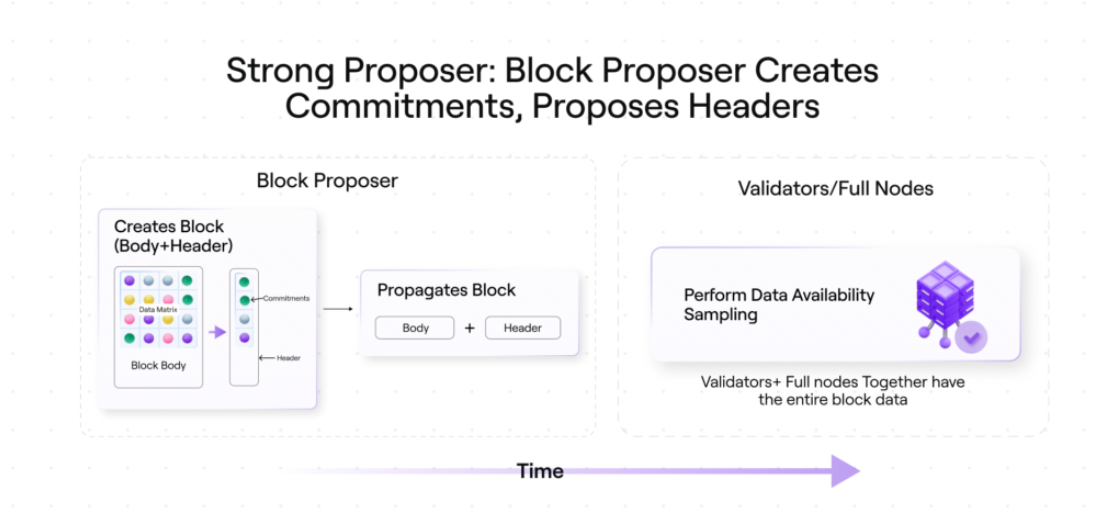

A more efficient model for Avail would have a single node build, and propagate commitments to the network. All other participants would then generate and verify proofs.

This is the first time we're enabling not only light clients, but any part of a chain can do this. We're allowing validators to sample in the same way light clients can sample.

This model has the single validator node which proposes a block creating commitments for all rows of the data matrix, and then only proposing headers.

Step 1: Proposer propagates just the header.

Step 2: Because validators receive only the header, they can’t decode or reconstruct the block. But because they can perform data availability sampling, they don’t need to.

Other validators in this scenario behave like light clients.

These other validators would use the commitments to sample for data availability, and only accept a block if they reach an availability guarantee.

In this world, all nodes will act similarly to light clients. Validators can avoid using block bodies to regenerate commitments just to ensure proper computation by the block producer.

Generating commitments to prove computation is unnecessary when validators can simply rely on proof verification.

It’s possible for full nodes to reach certainty that they’re following the correct chain with headers alone because we don’t need full nodes to verify valid execution of blocks; Avail doesn’t perform execution! We just need proof of availability, which the header (combined with a few random samples) can provide. This allows us to decrease the amount of computation necessary to be a validator.

This has the added benefit of potentially bringing down communication time as well.

Complications

We’re hesitant to commit to this model in the short term because it would require breaking away from the fundamental structure of Substrate. We would need to remove the extrinsic root which breaks all access to Substrate tooling, though it is an improvement we are actively exploring.

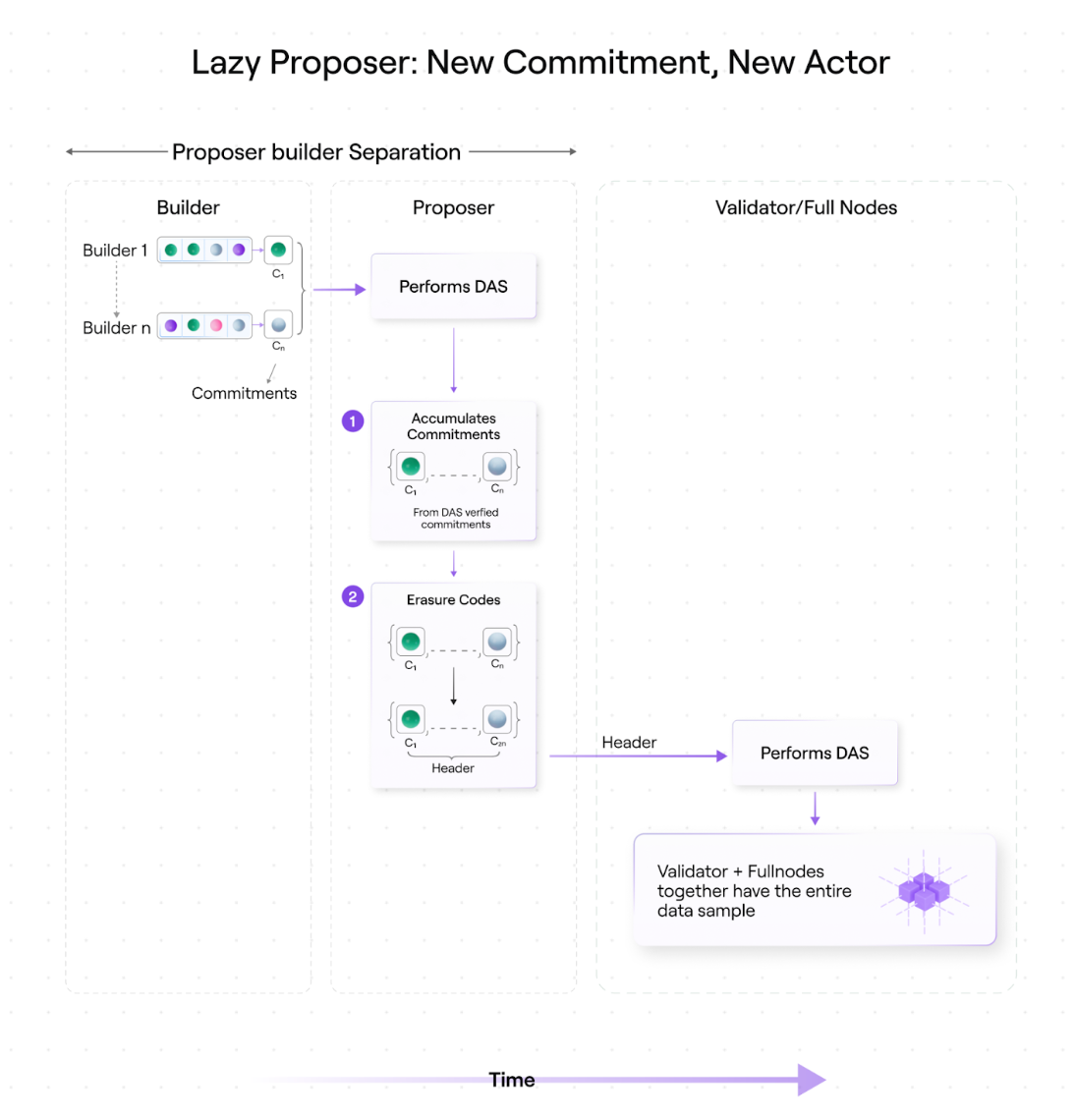

Another model borrows the shard blob model from EIP-4844.

For this system, imagine:

- Each row of a block’s data matrix is built by different builders along with that row’s associated polynomial commitment.

- Builders share their rows with the p2p network and their commitments to a proposer.

- Header Creation: A single-block proposer collects the commitments.

- The proposer samples from the builders (and p2p network) to confirm the given commitments generate valid openings before erasure coding the commitments. This original commitment + extended commitment combo becomes the header.

- The proposer shares this header with validators.

- Proposer and validators perform Data Availability Sampling by sampling random cells from the p2p network (or the builders), and confirming the data generates a valid opening against each cell’s row’s commitment.

- Once validators reach the statistical guarantee of availability, the block header is added to the chain.

The block proposer doesn’t need to do much of the work, as the commitments are generated by many participants.

The lazy proposer model has a single proposer of a block. Actors can then be broken down in a fashion similar to the proposer-builder separation above.

There can be multiple builders creating small chunks of the block. They all send those chunks to an entity (the proposer) that does random sampling on each piece to build a header that it proposes.

The body is built with logical constructs.

An example

What makes the lazy prosper model different is that block builders and block proposers are separate entities.

Suppose four block builders each have one row of the data matrix. Each builder creates a commitment using that row.

Each builder then sends their row and constructed commitment to a designated proposer, who samples data from the body to confirm the given commitments. The proposer then performs polynomial interpolation on the commitments so that they have not just the four originally constructed commitments, but eight commitments. The data matrix has now been erasure-coded and extended.

These eight rows and eight commitments are verified by the same proposer.

In looking at the entire matrix, we can see that half of the rows are built by the proposer (via erasure coding), the other half provided to them.

The producer then proposes a header, which everyone accepts. This results in blocks that look the same as the ones being currently produced by the Avail Testnet, though they are constructed much more efficiently.

The lazy proposer model for Avail is more efficient, but also quite complex. While there are other, lower-hanging fruit opportunities to optimize the system as a whole, the Avail team is excited to explore implementing this model.

Comparing Traditional Blockchain Transactions to the Lazy Proposer Model

The lazy proposer model is not dissimilar from how individual blockchain transactions on non-Avail blockchains are handled today.

Today, when anyone conducts a transaction on nearly any chain, they send a note of that transaction to all peers. Soon, every peer has this transaction in their mempool.

So what do block producers do?

The block producer takes transactions from their mempool, collates them together, and produces a block. This is a block producer’s typical role.

With Avail, the data blobs and their commitments are treated similarly to individual transactions. These blob+commitment combos are propagated on the system, in the same way individual transactions are sent on traditional chains.

Soon enough, everyone will have commitments to the blobs. And with the commitments, a proposer can begin randomly sampling to ensure data availability. With enough confidence from sampling, nodes will extend these commitments, accept the data in the body, and construct the header–thus creating the next block.

Closing

These architectural proposals for Avail are meant to showcase just how impactful separating the Data Availability layer from the rest of a blockchain’s core functions can be.

When Data Availability is handled on its own, optimizations can be made to treat Data Availability as a standalone layer that provides far greater improvements than what could be made when Data Availability is bound to other blockchain functions, like execution.

Whether they’re called Layer 3 solutions, modular blockchains, or off-chain scaling solutions, we’re excited to see what teams dream up with this dedicated Data Availability layer. Teams can rest assured that Avail will be capable of scaling directly alongside any chains or applications built atop it. As we build out a modular blockchain network of 100s of validators, thousands of light clients, and many, many new chains to come, we anticipate no problems with scaling to meet demand.

The Avail testnet is already live, with updated versions on the way. As Avail works toward the mainnet, we’re interested in partnering with any teams looking to implement data availability solutions on their chains.If you want to learn more about Avail, or just want to ask us a question directly, we would love to hear from you. Check out our repository, join our Discord server.

This article was originally published on Polygon's official blog.